Helped a public sector organization easily migrate large volumes of data to the Hadoop platform

Hadoop Migration: A Cost-Effective Solution for an E-governance Service

About the Client

The client is a public sector organization based in Australia whose primary focus is e-governance and administration services. The client is responsible for delivering government services to customers, businesses, and other government organizations. Because these e-governance services are delivered through Information and Communication Technology (ICT) applications, the client regularly needs to process large volumes of data drawn from them.

The Business Challenge

The client wanted to process large batches of data quickly, with up to 15 million transactions per day. At the same time, the constant growth of e-governance projects was generating large amounts of data that became historical every day, and an additional 8 TB of data was being added every month. The client needed to manage these large volumes of data and run analytical processing on them without performance issues.
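To put these figures in perspective, they can be turned into rough average rates. This is a minimal sketch, assuming (our assumption, not the case study's) that transactions are spread evenly over 24 hours; real peak loads would be considerably higher than the average.

```python
# Back-of-the-envelope scale figures from the case study.
# Assumption (not from the case study): load is spread evenly over 24 hours.

DAILY_TRANSACTIONS = 15_000_000   # up to 15 million transactions per day
MONTHLY_GROWTH_TB = 8             # additional 8 TB of data per month
SECONDS_PER_DAY = 24 * 60 * 60


def average_tps(daily_transactions: int) -> float:
    """Average transactions per second if the daily load were perfectly even."""
    return daily_transactions / SECONDS_PER_DAY


def yearly_growth_tb(monthly_tb: float, months: int = 12) -> float:
    """Logical data added over `months` months at a constant monthly rate."""
    return monthly_tb * months


if __name__ == "__main__":
    print(f"average load: {average_tps(DAILY_TRANSACTIONS):.1f} transactions/s")
    print(f"new data per year: {yearly_growth_tb(MONTHLY_GROWTH_TB)} TB")
```

This works out to roughly 174 transactions per second on average and 96 TB of new logical data per year, before any storage replication.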

What Aptus Data Labs Did

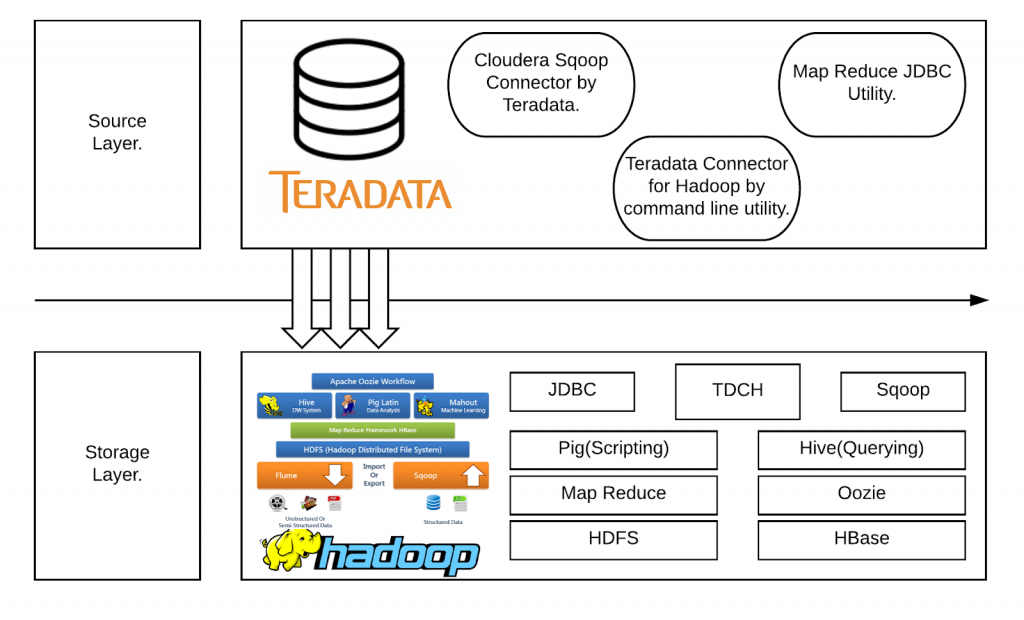

We migrated the client’s database to a multi-node Hadoop cluster to process the massive data volumes. To reduce costs, we used commodity hardware and distributed the processing using Hive and MapReduce. We also configured the platform to keep only hot data (the most recent 3 months) in Teradata and to move cold data (older than 3 months) to the Hadoop environment.
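The hot/cold split described above can be sketched as a simple routing rule based on a record's age. This is a minimal sketch, assuming each record carries a single date and that "3 months" means three calendar months before the current date; the function and tier names (`months_before`, `storage_tier`, `split_by_tier`, `"teradata"`, `"hadoop"`) are hypothetical, and the case study does not say how the boundary was actually enforced.

```python
import calendar
from datetime import date
from typing import Dict, List

HOT_MONTHS = 3  # from the case study: the most recent 3 months stay in Teradata


def months_before(day: date, months: int) -> date:
    """Return the date `months` calendar months before `day`, clamping to month end."""
    year_offset, month_index = divmod(day.month - 1 - months, 12)
    year = day.year + year_offset
    month = month_index + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(day.day, last_day))


def storage_tier(record_date: date, today: date) -> str:
    """Classify a record as hot (Teradata) or cold (Hadoop) by its age."""
    cutoff = months_before(today, HOT_MONTHS)
    return "teradata" if record_date >= cutoff else "hadoop"


def split_by_tier(record_dates: List[date], today: date) -> Dict[str, List[date]]:
    """Group record dates by the tier they should live in (hypothetical helper)."""
    tiers: Dict[str, List[date]] = {"teradata": [], "hadoop": []}
    for record_date in record_dates:
        tiers[storage_tier(record_date, today)].append(record_date)
    return tiers
```

For example, with `today = date(2024, 5, 31)` the cutoff is 29 February 2024, so a record dated 1 May 2024 stays in Teradata while one dated 15 January 2024 is routed to Hadoop. Clamping to the month end keeps the rule well defined for dates such as 31 May.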

The Impact Aptus Data Labs Made

The new analytics platform significantly reduced IT costs. It also enabled the client to handle large volumes of data without performance degradation.

The Business and Technology Approach

Aptus Data Labs followed the process below to migrate the data and resolve the client’s challenge. Aptus Data Labs:

- Prepared the data to be migrated by collecting the source data tables and data files from various business areas

- Used HDFS to store and process the data files

- Imported the data files directly into Hive and loaded them into HBase

- Used Sqoop to ingest data from Teradata into the Hadoop platform
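The Sqoop ingestion step can be sketched as a small Python helper (Python is among the tools listed) that assembles a `sqoop import` command for one Teradata table, optionally importing straight into a Hive table. This is a sketch under stated assumptions: the host, database, table, column, credential-file, and HDFS path values are hypothetical; it uses Sqoop's generic JDBC route with the Teradata JDBC driver class `com.teradata.jdbc.TeraDriver`, whereas a real deployment might use a dedicated Teradata connector; and it only builds the argument list rather than running it.

```python
import shlex
from typing import List, Optional


def sqoop_import_command(
    host: str,
    database: str,
    table: str,
    user: str,
    password_file: str,
    hive_table: Optional[str] = None,
    where: Optional[str] = None,
    split_by: Optional[str] = None,
    mappers: int = 4,
) -> List[str]:
    """Build (but do not run) a `sqoop import` command for one Teradata table."""
    cmd = [
        "sqoop", "import",
        # Generic JDBC connection using the Teradata JDBC driver (assumed setup).
        "--connect", f"jdbc:teradata://{host}/DATABASE={database}",
        "--driver", "com.teradata.jdbc.TeraDriver",
        "--username", user,
        "--password-file", password_file,  # keeps the password off the command line
        "--table", table,
        "--num-mappers", str(mappers),     # parallel map tasks doing the copy
    ]
    if split_by:
        cmd += ["--split-by", split_by]    # column used to divide work across mappers
    if where:
        cmd += ["--where", where]          # e.g. only rows older than the hot-data cutoff
    if hive_table:
        cmd += ["--hive-import", "--hive-table", hive_table]
    else:
        # Hypothetical landing directory in HDFS for plain file imports.
        cmd += ["--target-dir", f"/data/raw/{database}/{table}"]
    return cmd


if __name__ == "__main__":
    # All names below are hypothetical placeholders.
    cmd = sqoop_import_command(
        "td-host", "egov", "TXN", "etl_user", "/user/etl_user/.td_password",
        hive_table="egov.txn_cold",
        where="TXN_DATE < DATE '2024-02-29'",
        split_by="TXN_ID",
    )
    print(shlex.join(cmd))
```

Returning a list rather than a single string avoids shell-quoting problems: on an edge node with Sqoop installed, the list could be passed directly to `subprocess.run(cmd, check=True)`, while `shlex.join(cmd)` gives a shell-ready version for review.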

Workflow

The workflow below shows the high-level components of the migration:

Tools Used

Hadoop

- HDFS

- Hive

- HBase

- MapReduce

NDFS and Programming

- Teradata

- Linux

- Python

- Java

- Shell Scripting

The Outcome

The migrated Hadoop platform significantly reduced IT costs because, as an open-source system, it carries no license cost. Running it on commodity hardware also reduced the initial investment as well as the recurring costs of handling large volumes of data. In addition, because the Hadoop platform is set up as a cluster, new nodes can be added to handle the ever-growing volume of data. As a result, the client can process massive volumes of data smoothly without performance degradation.
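The point about adding nodes as data grows can be made concrete with a rough capacity estimate. This is a minimal sketch, assuming HDFS's default replication factor of 3, a hypothetical 40 TB of usable disk per commodity node, and a hypothetical 25% free-space headroom; compression, temporary MapReduce and Hive output, and the data already on the cluster are ignored.

```python
import math

HDFS_REPLICATION = 3  # HDFS default block replication (dfs.replication)


def raw_storage_tb(logical_tb: float, replication: int = HDFS_REPLICATION) -> float:
    """Disk space consumed once every block is stored `replication` times."""
    return logical_tb * replication


def nodes_needed(
    logical_tb: float,
    usable_tb_per_node: float,
    replication: int = HDFS_REPLICATION,
    headroom: float = 0.25,
) -> int:
    """Smallest node count whose usable disk fits the replicated data plus free headroom."""
    required_tb = raw_storage_tb(logical_tb, replication) / (1 - headroom)
    return math.ceil(required_tb / usable_tb_per_node)


if __name__ == "__main__":
    monthly_tb = 8  # growth figure from the case study
    for months in (1, 6, 12):
        logical = monthly_tb * months
        print(f"{months:>2} months: {logical} TB logical, "
              f"{raw_storage_tb(logical)} TB raw, "
              f"{nodes_needed(logical, usable_tb_per_node=40)} nodes at 40 TB each")
```

Under these assumptions, one year of growth (96 TB of logical data) needs 288 TB of raw HDFS capacity, or 10 such nodes for the new data alone. Because capacity scales roughly linearly with node count, the cluster can be extended incrementally rather than replaced.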

